(10/13)

A Giant Staring Eye, made with Anton for the International Conference on Social Robotics, 2013.

In 2010, Ben Winstone & I built a simple motion-tracking eyeball to show at Uncraftivism in the Arnolfini. It was a single animatronic 75mm eyeball in a case, with a very simple vision system that kept it looking at anything that moved. See for video and more detail.

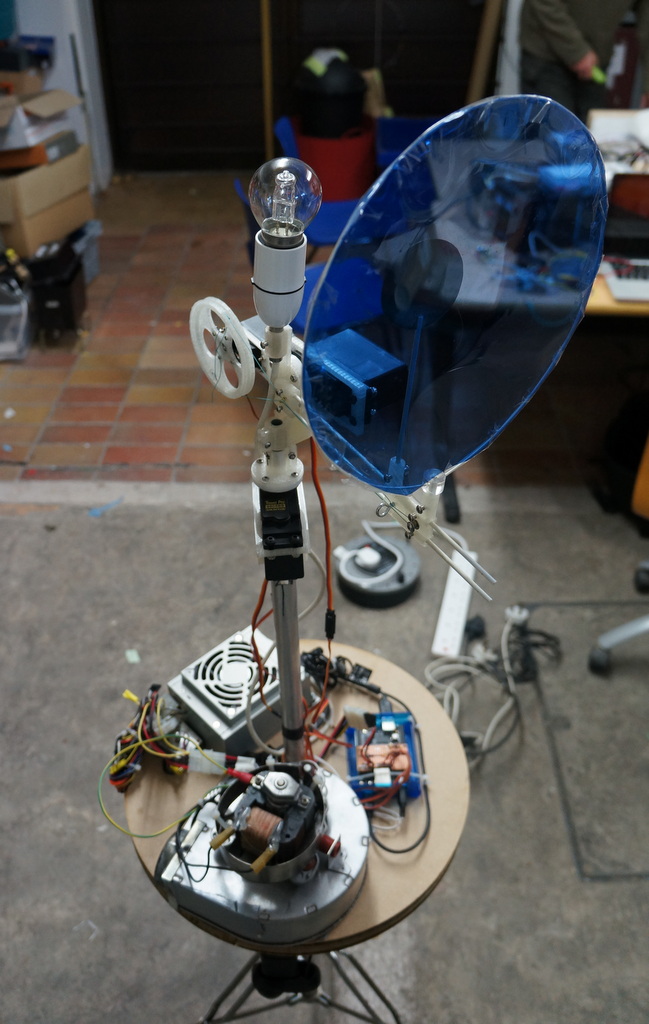

Anton & I wanted to do a similar thing, but with better tracking and on a grander scale. Hence, the Giant Staring Eye.

The International Conference on Social Robotics provided a great motivation to get the thing finished, in the form of a competition.

And since then, the Eye has had some other excursions:

December 2013: dorkbot Cardiff #15

December 2013: The 2013 Model Engineer Exhibition At Sandown Park

August 2014: EMFCamp 2014

August 2015: Bristol Mini Maker Faire 2015

October 2015: Derby Mini Maker Faire 2015

Technical detail.

Mechanics

The eyeball itself is a sphere of white ripstop nylon. We drew a pattern for one gore with a spreadsheet, cut out 24 of them, and sewed them together. The bottom hem has a sleeve for a drawcord, and the top has a couple of 25mm holes for vents.
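The spreadsheet itself isn't reproduced here, but the usual way to lay out a gore is simple. At arc length s from the top pole of a sphere of radius R, the parallel circle has circumference 2πR·sin(s/R), and each of the N gores covers 1/N of it. The Python sketch below computes that half-width profile. The 1000 mm diameter in the example is a hypothetical figure because the eyeball's actual size isn't given here. Seam allowances are left out, and in practice the gores would stop short of the bottom pole to leave room for the drawcord hem.

```python
import math


def gore_profile(diameter_mm, n_gores=24, steps=12):
    """Return (arc_length_mm, half_width_mm) pairs along one gore.

    Arc length is measured along the meridian from the top pole. At polar
    angle theta = s / R the sphere's parallel has circumference
    2 * pi * R * sin(theta), and each gore covers 1 / n_gores of it.
    No seam allowance is added.
    """
    radius = diameter_mm / 2.0
    meridian = math.pi * radius  # pole-to-pole length of one gore
    rows = []
    for i in range(steps + 1):
        s = meridian * i / steps
        width = 2.0 * math.pi * radius * math.sin(s / radius) / n_gores
        rows.append((s, width / 2.0))
    return rows


if __name__ == "__main__":
    # Hypothetical 1000 mm eyeball, 24 gores as in the text.
    for s, half in gore_profile(1000, n_gores=24, steps=6):
        print(f"{s:7.1f} mm from pole: +/- {half:5.1f} mm")
```

Each row gives a distance down the centre line of the pattern and the offset to mark on either side of it; joining the marks gives the gore outline.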

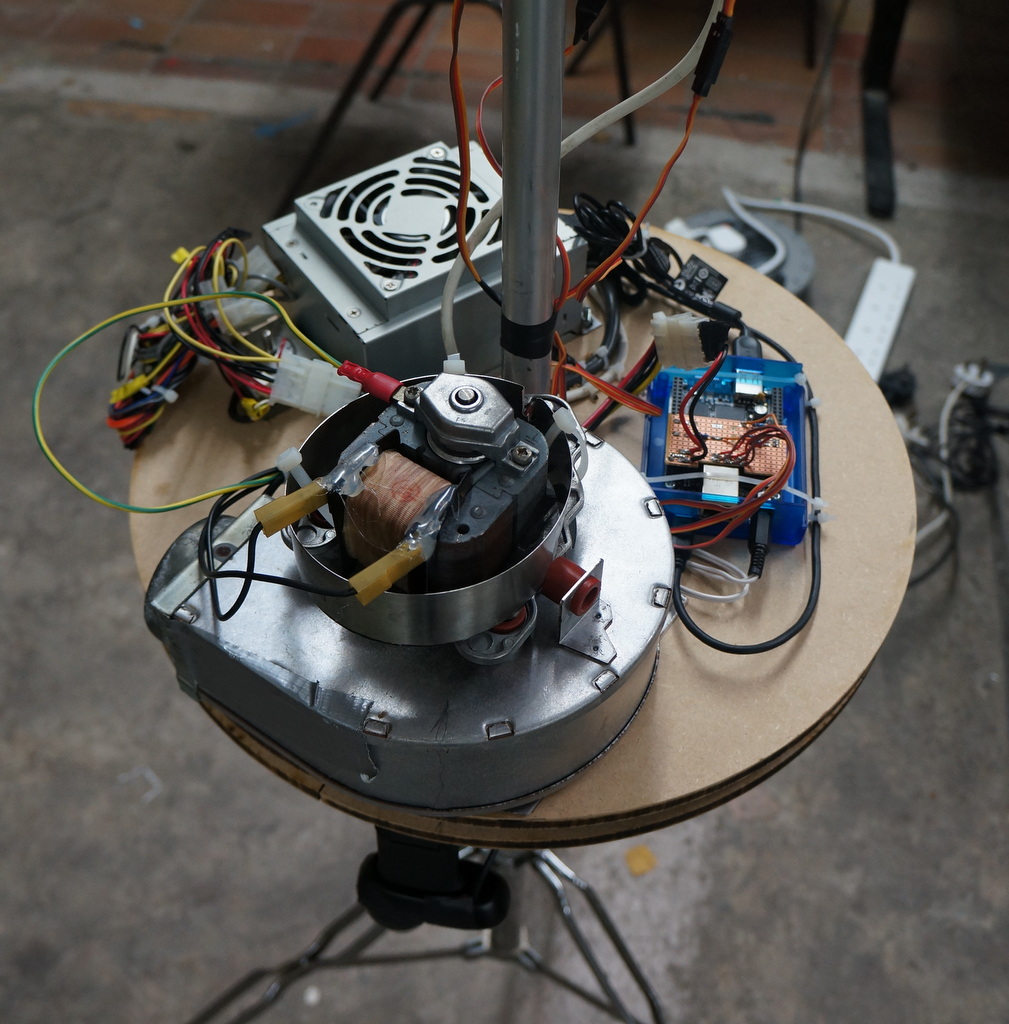

The baseplate is lasercut 9mm MDF. Many thanks to Andy at Your Laser for the cutting! A centrifugal blower (ex-combi-boiler) is mounted on top of the baseplate to provide inflation, along with an old PC power supply providing 5V for the BeagleBone and servos.

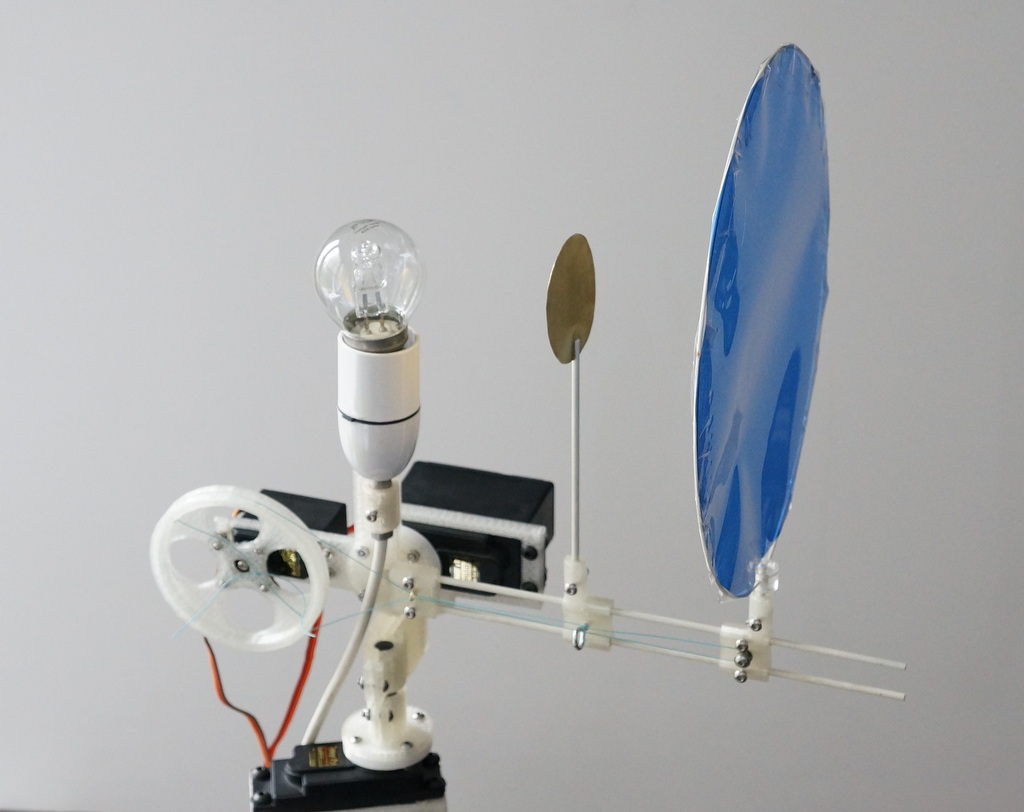

The central mechanism has three servos, for azimuth, altitude, and pupil size. The servos just move the iris and pupil shades around a central halogen bulb (chosen for its short filament, to get sharp shadows). The pupil shade is a disc of brass sheet that moves along a radial rail to change the apparent pupil size. The iris shade is a disc of theatrical lighting gel from eBay, taped to a hoop of thin fiberglass rod. The rest of the assembly is all 3D-printed on my Rostock.
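Why a sliding disc changes the pupil size: if the bulb is treated as a point source at the centre of the sphere (an assumption; the real filament is short but not a point), a disc of radius a at distance d from it casts a shadow cone of half-angle atan(a/d). On a sphere of radius R the pupil then spans an arc of about R·atan(a/d), so moving the shade outward along the rail shrinks the pupil. The same geometry sets the size of the iris ring. The function names and example dimensions below are hypothetical; this is a geometry sketch, not code from the project.

```python
import math


def pupil_half_angle(shade_radius_mm, distance_mm):
    """Half-angle (radians) of the shadow cone a disc casts from a point source."""
    return math.atan2(shade_radius_mm, distance_mm)


def pupil_arc_radius(shade_radius_mm, distance_mm, sphere_radius_mm):
    """Pupil radius measured along the sphere surface, bulb assumed at the centre."""
    return sphere_radius_mm * pupil_half_angle(shade_radius_mm, distance_mm)


def shade_distance_for(target_arc_mm, shade_radius_mm, sphere_radius_mm):
    """Inverse: how far along the rail to put the shade for a given pupil size."""
    alpha = target_arc_mm / sphere_radius_mm
    if not 0.0 < alpha < math.pi / 2:
        raise ValueError("pupil size out of range for a flat disc shade")
    return shade_radius_mm / math.tan(alpha)


if __name__ == "__main__":
    # Hypothetical numbers: 15 mm radius brass disc, 500 mm sphere radius.
    for d in (20, 40, 80):
        print(d, "mm ->", round(pupil_arc_radius(15, d, 500)), "mm pupil radius")
```

The inverse function is the one that would map a desired pupil size onto a rail position (and hence a servo angle), assuming the rail is radial as described.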

The pupil servo is a TowerPro 3003, and the altitude and azimuth servos are TowerPro 9805BB. These are cheap and powerful, but they sometimes hunted around their commanded position, which led to a lot of comical oscillation. It would be worth buying better servos, or even using steppers, for the altitude and azimuth axes. They also don't have much more than 180 degrees of rotation, which is regrettable for the azimuth axis.

The mechanical designs (baseplate DXF, .scad and .stl files for the printed parts) are here.

Software

A BeagleBone Black runs the thing, with Python code using OpenCV for image processing and the Adafruit BBIO library for servo control.

We used this Debian 7.2 flasher (log in as root with password root)

Install tools:

```shell
sudo apt-get install x11-apps xterm vim-gtk libcanberra-gtk-module guvcview build-essential libopencv-dev python-opencv -y
sudo apt-get install build-essential python-dev python-setuptools python-pip python-smbus -y
```

Then install the Adafruit BeagleBone IO Python library (Adafruit_BBIO).

Also see the BBIO servo docs, but note that their documentation seems to list the wrong pins as supporting servos.

See the project source for the pins we used. Servos are wired to the BBB pins via 1k resistors.
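For reference, hobby servos are usually driven with a pulse every 20 ms (50 Hz) whose width sets the angle, so a PWM library needs a duty-cycle percentage. The sketch below does that conversion and clamps the angle to the servo's travel, which matters given the roughly 180-degree limit mentioned above. The 1.0 to 2.0 ms pulse range and 180-degree span are typical assumptions, not measured values for the TowerPro servos, and would need calibrating per servo. The commented-out lines show how the result might be passed to Adafruit_BBIO's PWM module; the pin name there is hypothetical, not necessarily one we used.

```python
def angle_to_duty(angle_deg, min_pulse_ms=1.0, max_pulse_ms=2.0,
                  travel_deg=180.0, freq_hz=50.0):
    """Convert a servo angle to a PWM duty cycle in percent.

    The angle is clamped to [0, travel_deg], mapped linearly onto the pulse
    width range, then expressed as a percentage of the PWM period.
    """
    angle = max(0.0, min(travel_deg, float(angle_deg)))
    pulse_ms = min_pulse_ms + (max_pulse_ms - min_pulse_ms) * angle / travel_deg
    period_ms = 1000.0 / freq_hz
    return 100.0 * pulse_ms / period_ms


# On the BeagleBone the result might be used like this (pin name hypothetical):
#   import Adafruit_BBIO.PWM as PWM
#   PWM.start("P9_14", angle_to_duty(90), 50)
#   PWM.set_duty_cycle("P9_14", angle_to_duty(45))
```

Keeping the conversion as a pure function makes it easy to check the numbers on a desktop machine before anything is wired to the board.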

The camera is a Logitech C270 with a Kogeto Dot lens, mounted below the baseplate. The Dot is a 360-degree lens sold as an iPhone accessory; it gives a distorted, donut-shaped image of the panorama around the camera. We tracked motion directly on the donut rather than trying to dewarp it, but if you did want to dewarp one, you might look here.
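Tracking on the donut mostly comes down to two steps: find where something moved, then turn that image position into a bearing around the donut's centre. The stdlib-only sketch below uses simple frame differencing on two greyscale frames (plain lists of rows) and `atan2` for the bearing. It illustrates the idea under assumptions and is not the project's OpenCV code: the threshold, the donut centre and the function names are hypothetical, and the zero direction and sense of the bearing depend on how the camera is mounted, so they would need calibrating against the azimuth servo.

```python
import math


def motion_centroid(prev, curr, threshold=25):
    """Frame differencing: centroid (x, y) of pixels whose grey level changed
    by more than `threshold` between two frames, or None if nothing moved."""
    sx = sy = n = 0
    for y, (row_a, row_b) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_a, row_b)):
            if abs(a - b) > threshold:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None
    return sx / n, sy / n


def donut_bearing(point, centre):
    """Bearing in degrees [0, 360) and radius in pixels of a point around the
    donut centre, measured in image coordinates (y grows downward)."""
    dx = point[0] - centre[0]
    dy = point[1] - centre[1]
    bearing = math.degrees(math.atan2(dy, dx)) % 360.0
    return bearing, math.hypot(dx, dy)
```

A plain centroid works for one moving object. Two movers on opposite sides of the ring would average to a point near the centre, where the bearing is meaningless, so a circular mean of per-pixel bearings would be a more robust choice in that case.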
